ACI & NSX: Part 1 – Diving Deep into Cisco’s ACI

This is the second post in a weekly, ongoing deep dive into the subject of segmentation. It is also part one of a two-part look at ACI and NSX. Each post in the overarching series on segmentation is written by a member of Arraya’s technical or tactical teams and focuses on a specific piece of this extremely broad and highly transformational topic.

Network virtualization is massively disrupting modern data center methodologies. The two major market players are Cisco’s Application Centric Infrastructure (ACI) and VMware’s NSX. Both solutions offer programmable network policy management, inter-site layer 2 workload mobility, and support for physical and virtual workloads. Given the many similarities between the two products, it is important to understand the specifics of each technology and how their respective designs deliver their marketed value propositions. According to VMware, it is common to implement the technologies in tandem. Each vendor has specific recommendations and guidance for these architectures, but their views differ somewhat. Architects, engineers, and technical business leaders need to understand the implications, benefits, and risks before deciding how to implement these network overlay and underlay designs to achieve a secure and scalable modern data center.

Diving deep into ACI

Cisco ACI is a controller-based physical network underlay combined with a software overlay. Cisco describes its methodology as an integrated overlay approach, meaning it spans both hardware and software. ACI hardware includes the Cisco Nexus 9000 family of switches and a proprietary Application Policy Infrastructure Controller (APIC) that provides unified management and distributes policy to the fabric; the APIC itself sits outside the data path.

The ACI fabric is built on a spine-leaf architecture, with a three-server APIC cluster redundantly attached to the fabric. Regardless of the application or the compute medium (physical or virtual), ACI pushes all network policies and distributed default gateways (the Pervasive Gateway) down to the leaf switches, so forwarding and filtering decisions are made at the switch. This is what allows any workload, with any IP address, to live anywhere in an ACI data center, using simplified and optimized Virtual Extensible LAN (VXLAN). This combined IP and security-policy mobility contributes a large portion of ACI’s value proposition for modern data centers.
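
To make the Pervasive Gateway idea concrete, here is a toy Python model. It is a sketch under simplifying assumptions, not how the APIC actually programs the leaves: the class names (`BridgeDomain`, `Leaf`, `Fabric`), the leaf names, and the addresses are hypothetical. It shows the property described above: every leaf carries the same gateway IP for a subnet, so a workload that moves to another leaf keeps its IP address and still finds its default gateway on the local switch.

```python
from dataclasses import dataclass, field

# Toy model of a distributed ("pervasive") default gateway.
# BridgeDomain, Leaf, and Fabric are hypothetical names, not APIC objects.


@dataclass(frozen=True)
class BridgeDomain:
    name: str
    gateway_ip: str  # the same gateway IP is configured on every leaf


@dataclass
class Leaf:
    name: str
    gateways: dict = field(default_factory=dict)  # BD name -> gateway IP


class Fabric:
    def __init__(self, leaf_names):
        self.leaves = {n: Leaf(n) for n in leaf_names}
        self.endpoints = {}  # endpoint IP -> (leaf name, BD name)

    def deploy_bd(self, bd: BridgeDomain) -> None:
        # The controller pushes an identical gateway to every leaf.
        for leaf in self.leaves.values():
            leaf.gateways[bd.name] = bd.gateway_ip

    def attach(self, ep_ip: str, leaf_name: str, bd_name: str) -> None:
        # Attaching again with a different leaf models a workload move.
        self.endpoints[ep_ip] = (leaf_name, bd_name)

    def first_hop(self, ep_ip: str):
        # The gateway answering for ep_ip lives on the endpoint's local leaf.
        leaf_name, bd_name = self.endpoints[ep_ip]
        return leaf_name, self.leaves[leaf_name].gateways[bd_name]


fabric = Fabric(["leaf101", "leaf102"])
fabric.deploy_bd(BridgeDomain("web-bd", "10.1.1.1"))
fabric.attach("10.1.1.10", "leaf101", "web-bd")
before = fabric.first_hop("10.1.1.10")  # ('leaf101', '10.1.1.1')
fabric.attach("10.1.1.10", "leaf102", "web-bd")  # the VM moves to another leaf
after = fabric.first_hop("10.1.1.10")   # ('leaf102', '10.1.1.1')
```

The workload’s IP and gateway address stay the same across the move; only the leaf that serves it changes.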

Additionally, because ACI is a VXLAN-integrated management platform that supports active-active equal-cost multi-pathing (ECMP), it removes the need for Spanning Tree Protocol (STP) loop prevention in the fabric. ECMP and ACI’s security policy strategy are major factors in its return on investment and total cost of ownership. ECMP eliminates idle links that sit waiting for a potential failure, as well as costly, partially used link aggregations added for throughput. On the security side, ACI enforces traffic policy inside the fabric at the leaf switches, across layers 2 and 3, which limits the amount of network hair-pinning in the data center.
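
The difference between ECMP and STP can be illustrated with a short Python sketch of hash-based flow placement. Hashing a flow’s 5-tuple keeps every packet of that flow on one path (avoiding reordering) while spreading different flows across all equal-cost uplinks, instead of leaving a blocked standby link. SHA-256 is only a stand-in here; real switch ASICs use their own hash functions, and the spine names and addresses are hypothetical.

```python
import hashlib
from collections import Counter

# Illustrative ECMP next-hop selection: hash a flow's 5-tuple and pick one of
# N equal-cost uplinks. SHA-256 stands in for a switch's hardware hash.


def pick_uplink(src_ip, dst_ip, proto, src_port, dst_port, uplinks):
    key = f"{src_ip}|{dst_ip}|{proto}|{src_port}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    return uplinks[int.from_bytes(digest[:4], "big") % len(uplinks)]


spines = ["spine1", "spine2", "spine3", "spine4"]

# The same flow always hashes to the same uplink.
flow = ("10.1.1.10", "10.2.2.20", "tcp", 49152, 443)
path = pick_uplink(*flow, spines)

# 1,000 flows (differing only by source port) spread over all four spines.
load = Counter(
    pick_uplink("10.1.1.10", "10.2.2.20", "tcp", port, 443, spines)
    for port in range(49152, 49152 + 1000)
)
print(load)
```

Every uplink carries traffic, which is the utilization benefit the paragraph attributes to ECMP over STP’s blocked ports.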

Exploring how else ACI can impact modern organizations

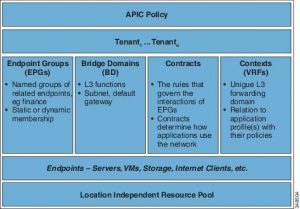

As we continue to deconstruct ACI, let us review its network and application policy constructs. ACI uses a hierarchical policy model designed primarily around multi-tenancy. At the core of its traffic control policies are Endpoint Groups (EPGs), which group similar workloads so that application and communication policies can be applied to them. The communication policies between EPGs are called Contracts. Contracts dictate which ports are allowed between EPGs, and even between individual servers or virtual machines in different EPGs. This is how ACI achieves micro-segmentation. EPGs can be further grouped into an Application Network Profile (ANP). The ANP construct enables quick duplication of application communication policies. For example, an application may need separate environments for development and testing. Admins can quickly clone the ANP and add servers to new or existing EPGs, and those servers inherit all of the same communication and security policies. This is a powerful tool for securing and managing common network requirements for VMware environments.
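
The EPG, Contract, and ANP relationships can be sketched as a small whitelist model in Python. This is a simplified illustration, not the APIC object model or its REST API: the class names, the `allowed` and `clone` methods, the EPG names, and the ports are all hypothetical. Traffic inside an EPG is permitted, traffic between EPGs is denied unless a contract from the consumer EPG to the provider EPG lists the port, and cloning a profile copies the EPGs and contracts so new servers inherit the same policy.

```python
from copy import deepcopy
from dataclasses import dataclass, field

# Toy whitelist model of EPGs, contracts, and an application profile (ANP).
# All names here are hypothetical; this is not the APIC object model.


@dataclass
class Contract:
    consumer: str        # EPG that initiates traffic
    provider: str        # EPG that offers the service
    ports: frozenset     # allowed destination TCP ports


@dataclass
class AppProfile:
    name: str
    epgs: dict = field(default_factory=dict)       # EPG name -> endpoint names
    contracts: list = field(default_factory=list)

    def epg_of(self, endpoint):
        for epg, members in self.epgs.items():
            if endpoint in members:
                return epg
        return None

    def allowed(self, src, dst, port):
        src_epg, dst_epg = self.epg_of(src), self.epg_of(dst)
        if src_epg is None or dst_epg is None:
            return False   # unknown endpoints get no access
        if src_epg == dst_epg:
            return True    # intra-EPG traffic is permitted in this model
        return any(c.consumer == src_epg and c.provider == dst_epg
                   and port in c.ports for c in self.contracts)

    def clone(self, new_name):
        # Copy EPG definitions and contracts; memberships start empty.
        return AppProfile(new_name, {epg: set() for epg in self.epgs},
                          deepcopy(self.contracts))


prod = AppProfile("shop-prod",
                  {"web": {"web01"}, "app": {"app01"}, "db": {"db01"}},
                  [Contract("web", "app", frozenset({8443})),
                   Contract("app", "db", frozenset({5432}))])

dev = prod.clone("shop-dev")
dev.epgs["web"].add("web-dev01")
dev.epgs["app"].add("app-dev01")
```

The development servers added to the cloned profile get exactly the web-to-app and app-to-db rules of production without any per-server configuration.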

vMotion, IP storage, vSAN replication, Fault Tolerance, and other services can each be grouped into their own EPGs across hypervisor clusters, allowing inter- and intra-cluster communication for each service while keeping the services segmented from one another. This offers a more stringent security posture in highly regulated or multi-tenant environments.
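
Applied to VMware infrastructure traffic, the same idea can be sketched as one EPG per VMkernel service, with membership spanning clusters. The host, cluster, and EPG names below are hypothetical, and the model ignores everything except EPG membership and contracts: members of the same service EPG can reach each other within and across clusters, while different services stay isolated unless a contract is added.

```python
# Hypothetical inventory: (host, cluster, VMkernel service) for two clusters.
vmkernel_ports = [
    ("esx-a1", "cluster-a", "vmotion"), ("esx-a1", "cluster-a", "vsan"),
    ("esx-a1", "cluster-a", "ft"),
    ("esx-b1", "cluster-b", "vmotion"), ("esx-b1", "cluster-b", "vsan"),
    ("esx-b1", "cluster-b", "ft"),
]


def build_service_epgs(ports):
    """Group VMkernel interfaces into one EPG per service, across all clusters."""
    epgs = {}
    for host, cluster, service in ports:
        epgs.setdefault(f"infra-{service}", set()).add((host, cluster))
    return epgs


def can_talk(contracts, src_epg, dst_epg):
    """Same EPG is allowed; different EPGs need an explicit contract."""
    return src_epg == dst_epg or (src_epg, dst_epg) in contracts


epgs = build_service_epgs(vmkernel_ports)
contracts = set()  # no contracts between service EPGs, so services stay isolated

for name, members in sorted(epgs.items()):
    print(name, sorted(members))
```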

VXLAN overlay is at the heart of the modern data center’s adoption of network virtualization. It is the latest methodology for achieving IP mobility at any scale, and that mobility opens up opportunities for workload resiliency regardless of physical distance. That is not to say latency no longer matters: active-active workloads with layer 2 adjacency still require a round trip time (RTT) of no more than 150 ms. Still, stretching layer 2, even just for crash-consistent recovery, was previously hard enough to manage that it was a barrier to entry.
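
A quick propagation-delay calculation shows why distance still matters. The sketch below assumes light in fiber travels at roughly 200,000 km/s (about two-thirds of its speed in a vacuum), which works out to about 1 ms of round trip time per 100 km of fiber. It ignores switching, queuing, and the fact that fiber paths are longer than straight-line distance, so real RTTs will be higher; the 150 ms budget is the figure quoted above.

```python
# Back-of-the-envelope propagation delay for a stretched layer 2 link.
# Assumption: ~200,000 km/s in fiber, i.e. 200 km per millisecond, one way.
FIBER_KM_PER_MS = 200.0


def propagation_rtt_ms(fiber_km: float) -> float:
    """Round trip propagation delay only (no switching or queuing)."""
    return 2 * fiber_km / FIBER_KM_PER_MS


def within_budget(fiber_km: float, budget_ms: float = 150.0) -> bool:
    return propagation_rtt_ms(fiber_km) <= budget_ms


for km in (100, 1_000, 5_000):
    print(f"{km} km of fiber -> {propagation_rtt_ms(km)} ms RTT")
```

Propagation alone would allow roughly 15,000 km of fiber within 150 ms, so in practice equipment delay and application sensitivity, not the speed of light, tend to set the real limit.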

Adding BUM traffic into the conversation

ACI does bring operational simplicity to VXLAN and security management efficiencies to a single console. However, ACI still introduces plenty of net-new route types and protocol abstractions that are unfamiliar to most engineers, and familiarity with them is important for troubleshooting and management at scale. For example, initial releases of native VXLAN used VXLAN tunnel endpoints (VTEPs) to extend layer 2 networks over layer 3 tunnels. Like any layer 2 technology, VTEPs relied on MAC learning, and therefore on flooding within a broadcast domain. This represented a huge step backward from 802.1Q broadcast domain efficiencies. While there were multiple steps along the journey, let us jump to where we have landed today: a combination of special handling for broadcast, unknown unicast, and multicast traffic (BUM traffic), Multiprotocol Border Gateway Protocol (MP-BGP), and Ethernet VPN (EVPN).
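
The flood-and-learn behavior of early VXLAN can be illustrated with a minimal Python model of three VTEPs in one VNI. It is a sketch, not a real VTEP implementation: the `Vtep` class, the documentation-range IP addresses, and the shortened MAC addresses are hypothetical, and flooding is modeled as head-end replication to every peer rather than underlay multicast. A frame for an unknown MAC is flooded to all peers, each receiver learns the source MAC against the sending VTEP, and later frames go unicast to a single VTEP.

```python
from dataclasses import dataclass, field

# Minimal flood-and-learn model of VTEPs sharing one VNI.


@dataclass
class Vtep:
    ip: str
    # Other VTEPs in the same VNI (excluded from repr/eq to avoid recursion).
    peers: list = field(default_factory=list, repr=False, compare=False)
    mac_table: dict = field(default_factory=dict)  # remote MAC -> VTEP IP

    def send(self, src_mac, dst_mac):
        """Return the VTEP IPs this frame is encapsulated to."""
        if dst_mac in self.mac_table:
            targets = [self.mac_table[dst_mac]]      # known: unicast
        else:
            targets = [p.ip for p in self.peers]     # unknown unicast: flood
        for peer in self.peers:
            if peer.ip in targets:
                peer.receive(src_mac, self.ip)
        return targets

    def receive(self, src_mac, from_vtep_ip):
        self.mac_table[src_mac] = from_vtep_ip       # learn from the data plane


v1, v2, v3 = Vtep("192.0.2.1"), Vtep("192.0.2.2"), Vtep("192.0.2.3")
for v in (v1, v2, v3):
    v.peers = [p for p in (v1, v2, v3) if p is not v]

first = v1.send("aa:aa", "bb:bb")  # bb:bb unknown, flooded to both peers
reply = v2.send("bb:bb", "aa:aa")  # v2 learned aa:aa, unicast to v1
again = v1.send("aa:aa", "bb:bb")  # v1 learned bb:bb, unicast to v2
```

Note that v3 received and learned from a frame it never needed; at scale, that flooding is the inefficiency the paragraph describes.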

Let’s consider BUM traffic handling. To achieve layer 2 mobility over a layer 3 network, managing MAC address learning is essential, yet data plane challenges remain: increased broadcast traffic and MAC tables that do not scale. Multicast control plane mechanisms offer a distinct advantage for optimization and scalability. Each VXLAN segment, much like a VLAN, has its own unique ID: a 24-bit VXLAN network identifier (VNI). At the spine switches, a multicast group is created and the participating VNIs are associated with it. MP-BGP then distributes host MAC and IP (ARP) information to the VTEPs for the hosts belonging to each VNI. This is a complex setup, but it is highly scalable for large multi-site VXLAN implementations.

The good news is that the ACI APIC controllers handle IGMP and multicast group membership on the spines and leaves, which provides a single learning platform for the data center. This complexity returns, however, when building multi-site VXLAN deployments where ACI does not exist in both locations. Multiprotocol iBGP/eBGP peers and route reflectors, EVPN peering, and PIM multicast groups all need to be configured manually on the physical network at the non-ACI location. If ACI exists in both locations (Cisco offers ACI Multi-Site), dedicated inter-data center controllers programmatically define and learn about remote sites. This helps extend VXLAN awareness across sites and preserves the ease of VXLAN management.
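
Three ideas from the discussion above can be sketched in Python: the 8-byte VXLAN header from RFC 7348, which carries the 24-bit VNI; a mapping from VNIs to underlay multicast groups for BUM traffic; and an EVPN-style host table that lets a VTEP answer ARP from control-plane information instead of flooding. Only the header layout comes from the standard. The group-allocation scheme (`bum_group`, the `239.1.0.0` base, the pool size) and the `EvpnHostTable` class are hypothetical illustrations, not how ACI or any particular switch allocates groups or stores routes.

```python
import ipaddress
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag from RFC 7348


def vxlan_header(vni: int) -> bytes:
    """8-byte VXLAN header: flags(8) reserved(24) VNI(24) reserved(8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def bum_group(vni: int, base: str = "239.1.0.0", pool_size: int = 256):
    # Hypothetical allocation: fold VNIs into a pool of underlay multicast
    # groups so BUM traffic only reaches VTEPs that joined that group.
    return ipaddress.IPv4Address(base) + (vni % pool_size)


class EvpnHostTable:
    """Control-plane-learned host entries, in the spirit of EVPN type 2 routes."""

    def __init__(self):
        self.entries = {}  # (vni, host IP) -> (host MAC, VTEP IP)

    def learn(self, vni, mac, ip, vtep_ip):
        self.entries[(vni, ip)] = (mac, vtep_ip)

    def answer_arp(self, vni, ip):
        # A known host's MAC is returned locally; None means "would flood".
        hit = self.entries.get((vni, ip))
        return hit[0] if hit else None


table = EvpnHostTable()
table.learn(5000, "00:50:56:aa:bb:cc", "10.1.1.10", "192.0.2.2")
print(vxlan_header(5000).hex())              # 0800000000138800
print(bum_group(5000))                       # 239.1.0.136
print(table.answer_arp(5000, "10.1.1.10"))   # 00:50:56:aa:bb:cc
```

The 24-bit VNI field is what allows roughly 16 million segments, compared with 4,094 usable 802.1Q VLAN IDs.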

David Mahoney’s post on ACI and NSX will continue next week. In part two, he takes a look at NSX and how it works together with ACI. To learn more about segmentation and its role in today’s IT landscape, reach out to our team of experts by visiting: https://www.arrayasolutions.com//contact-us/.